In the rapidly evolving world of technology, artificial intelligence (AI) continues to push the boundaries of what machines can achieve. As AI systems grow more sophisticated, a compelling question arises: how intelligent are these systems, and can their intelligence be measured quantitatively, the way human intelligence is? The Intelligence Quotient (IQ), traditionally used to gauge human cognitive abilities, offers a fascinating lens through which to assess them. Before running this analysis, my prediction was that, because large language models (LLMs) are strong problem solvers, they would achieve a high or even perfect IQ score.

In this blog post, I use a 10-question IQ test that covers the main types of questions found on a full-length IQ test. You can take the test yourself here! https://www.123test.com/iq-test/

Overall score: 7/10

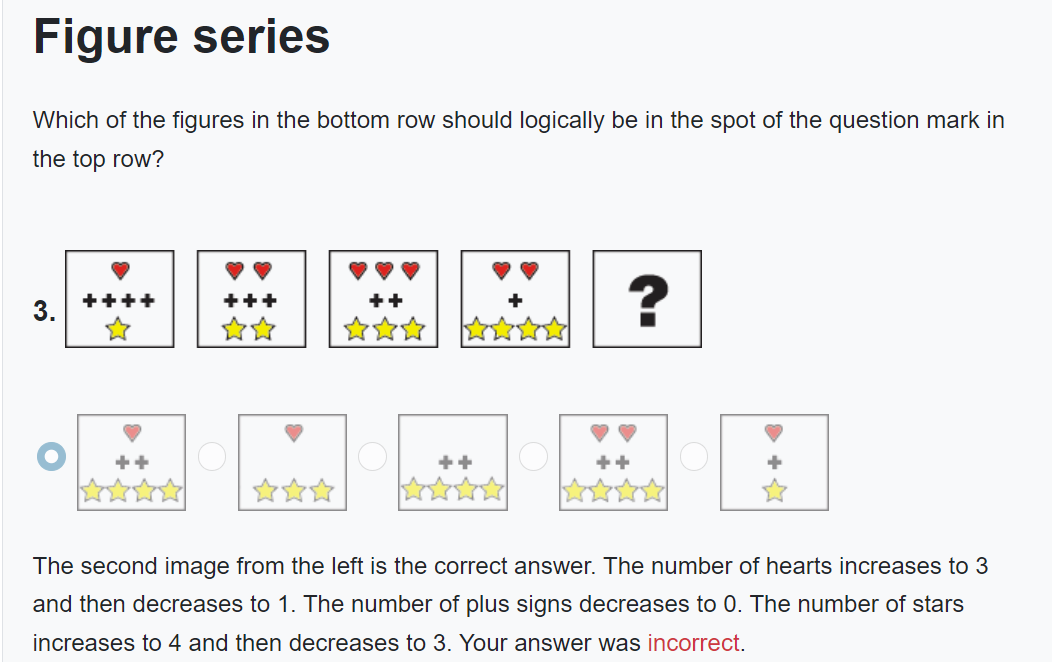

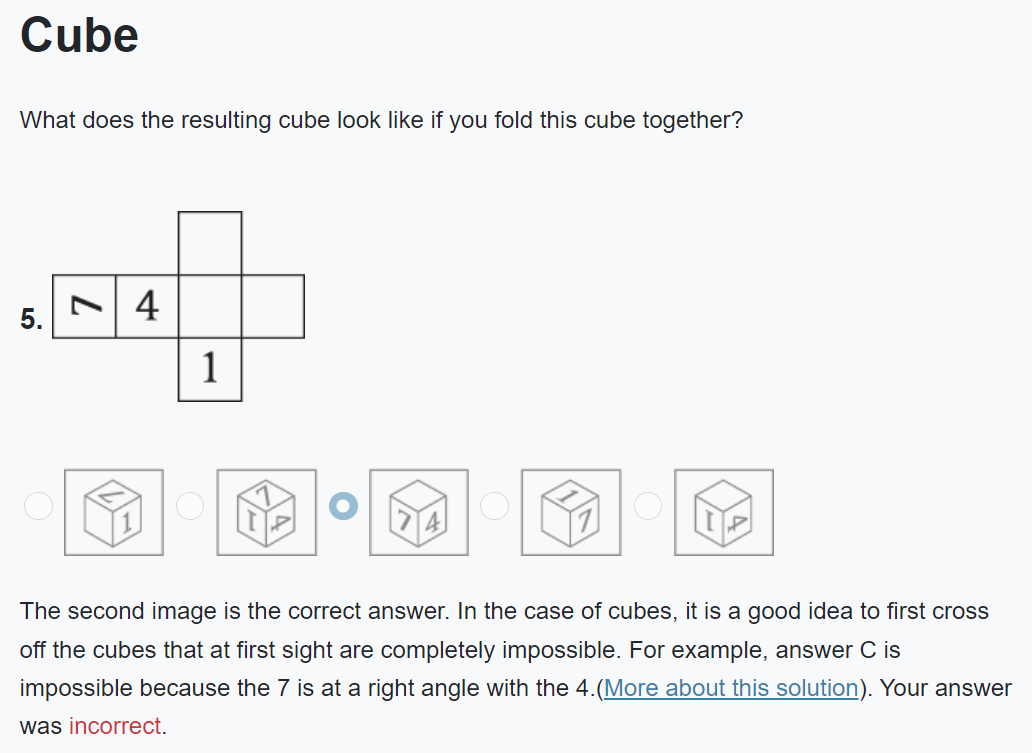

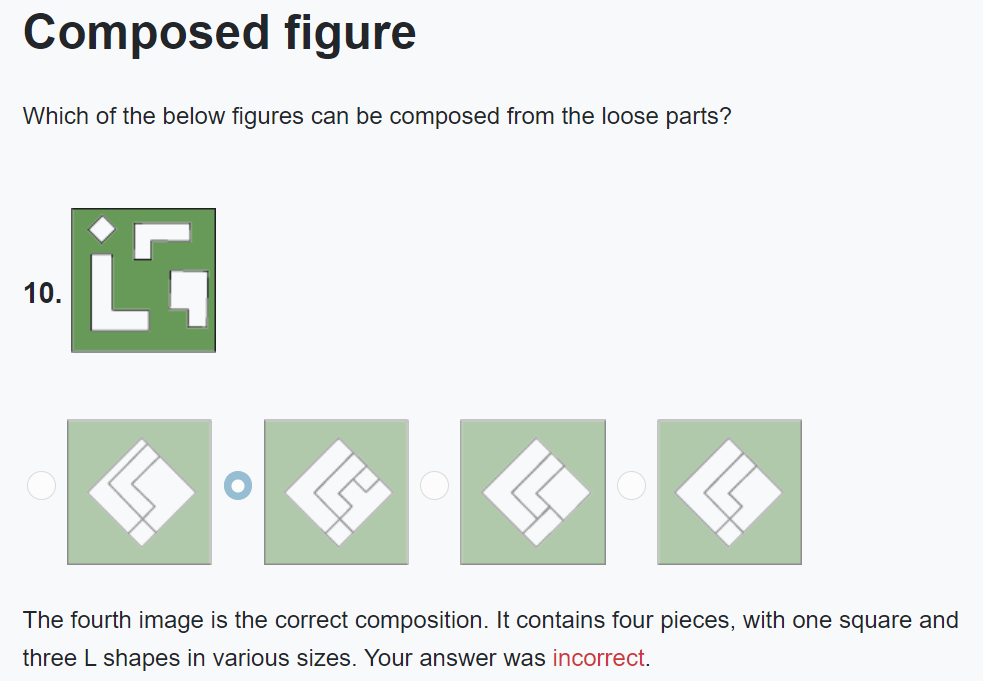

On a human scale, this would be a strong IQ score. However, the result surprised me, since I expected the LLM either to get a perfect score or to miss at most one question. Let’s take a look at which questions the LLM got wrong.

It seems that GPT-4 has difficulty with visual patterns, even though it identified numerical patterns effectively. This raises the question of whether the limitation stems from difficulty processing images or from a lack of “knowledge” about visual patterns. It would also be worth investigating how this affects GPT-4’s performance on other kinds of visual tasks. Overall, this analysis shows that AI is not a flawless problem solver: there are still areas that may be accessible to humans but remain challenging for AI.